I was working with replication today and testing out some commands when I ran across some strange behaviors with the monitor sproc. I’m going to outline what I’ve done here in an effort to document it and hopefully receive some sort of explanation as to what is happening.

Environment:

I’m running the following versions for this test:

Microsoft SQL Server 2008 R2 (SP1) – 10.50.2500.0 (X64) Jun 17 2011 00:54:03 Copyright (c) Microsoft Corporation Developer Edition (64-bit) on Windows NT 6.1 <X64> (Build 7601: Service Pack 1)

I have multiple instances located on the development machine that I am using, but here is the relevant setup information:

Instance Name: DEVELOPMENT08

Publication Database: AutomationTestDB_Master

Publication Name: AutomationTest_TRANS

Publication Details: Transactional, Push

Distribution Agent: Continuous, located on same instance (DEVELOPMENT08)

Instance Name: DEVELOPMENT08_01

Subscription Database: AutomationTestDB_Replicate1

Instance Name: DEVELOPMENT08_03

Subscription Database: AutomationTestDB_Replicate3

Producing the behavior:

After profiling the DEVELOPMENT08 instance while using the replication monitor, I found that it inserts a tracer token by executing the following command:

EXEC dbo.Monitor_Replication @publication = [AutomationTest_TRANS]

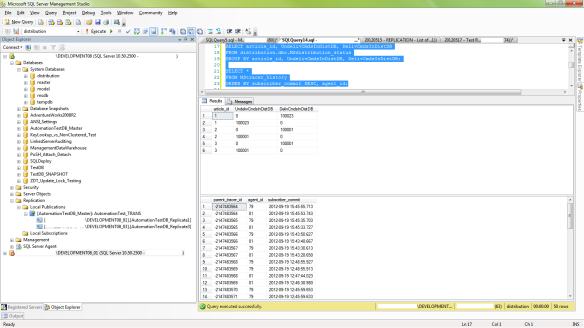

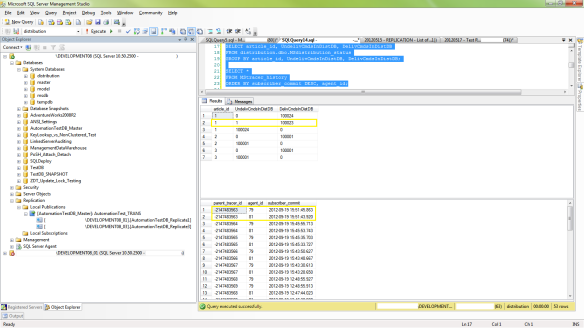

With replication running and all subscribers up-to-date, when I execute this command I get the following output:

This information can also be seen on the ‘Tracer Token’ tab of the Replication Monitor for this publication:

Everything is as expected – the tracer token was sent to the distributor and returned while reporting latency along each stop in the path. The problem appeared when I started testing what happens if there is a simulated network outage.

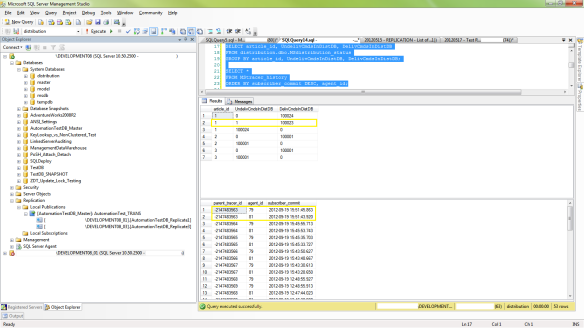

To simulate the network outage, I stopped one of the subscriber instances (DEVELOPMENT08_01) and ran the replication monitor procedure. I expected to get a message indicating failure here, but much to my surprise, it was reported that the token made it to the subscriber and back without any issue:

WHAT JUST HAPPENED HERE? I inserted a token and was told by the monitoring sproc that it came back, but when looking at the commands, it shows that there is an undelivered command. There is absolutely no other traffic on this machine as it is kept in a virtual development environment that I have full control over. As stated before, I expected to see a failure indicating that the subscriber was unavailable, or at the very least see the procedure continue to run.

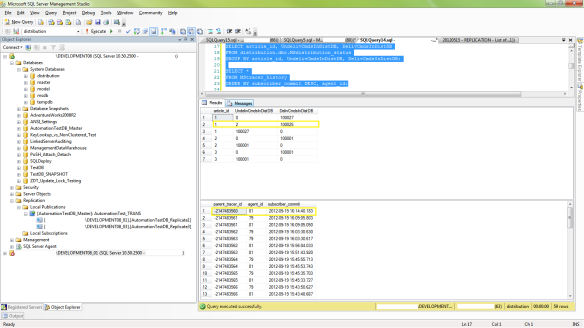

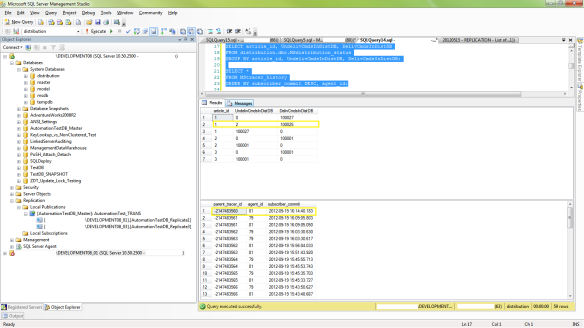

Now I was curious, so I ran the monitoring sproc again to see what would happen:

This time the sproc just continues to spin, and there are now two undistributed commands. Why didn’t it complete this time? I haven’t changed anything about what I am doing here – same procedure as with the first token, but this time the monitoring sproc continues to wait for the token to return (as I expected it to do with the first token).

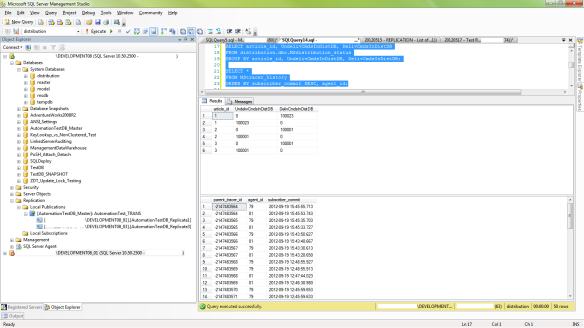

After allowing this to run for about 7 minutes or so, I started the subscriber instance and observed the following:

The tokens went through, the sproc completed, and there were no undistributed commands left once replication had a chance to catch up.

My next step was to test if this was just a byproduct of executing the command from T-SQL rather than through the replication monitor GUI. I stopped the subscriber (DEVELOPMENT08_01) again, and used the replication monitor GUI to insert a tracer token:

As seen here, even though replication monitor shows that there is an issue with replication (denoted by the red stop sign icon), the token was reported as having gone through to the subscriber. Unfortunately, that token is still showing up as an undistributed command, just as in the case when executing this from the T-SQL query.

I wanted to replicate the first case again, so I inserted another token without starting the subscriber instance and observed the following:

This time the replication monitor GUI is showing a ‘Pending…’ status and there are now two undistributed commands showing. Just as in the first example!

Following the first example, now it is time to start the subscriber again and observe the results:

Everything is caught up and the tokens have come through!

What is going on here and why does this sproc return successfully on the first attempt when the subscriber is powered down? I cannot understand this behavior at all and am beginning to think this is a bug.

If anyone can offer any insight into this behavior then it would be greatly appreciated – I’d really like to know what is going on!